This is the final piece of the med series.

TL;DR

Yes, I am throwing the buzzword “reasoning” at you, and on top of that, I claim that I want to build a fancy “medical diagnosis agent” with it. The ideas presented here are largely inspired by three things:

- The necessary condition: Zhang Xiangyu’s talk on multimodality and reasoning, which led me to believe that reasoning is necessary for image understanding.

- The sufficient condition: OpenAI’s o3 results, which convinced me that an LLM can reason and plan.

- The approach: the o3 “place detective” blog post, which made me realize this approach will work.

What follows are my logical steps and the specifics of the claim.

The logic is pretty straightforward:

- The current way of “solving” imaging tasks is running into scalability issues in two ways:
  - Data scarcity: as model performance improves, it becomes harder to curate more diverse data and to label it.
  - Limited transferability: no matter how well we develop a model in one domain, it is pretty much a one-time deal; it transfers poorly to other applications.

How?

The approach involves building a multimodal reasoning system that combines specialized vision models with language model planning and reasoning capabilities.

What is an agent?

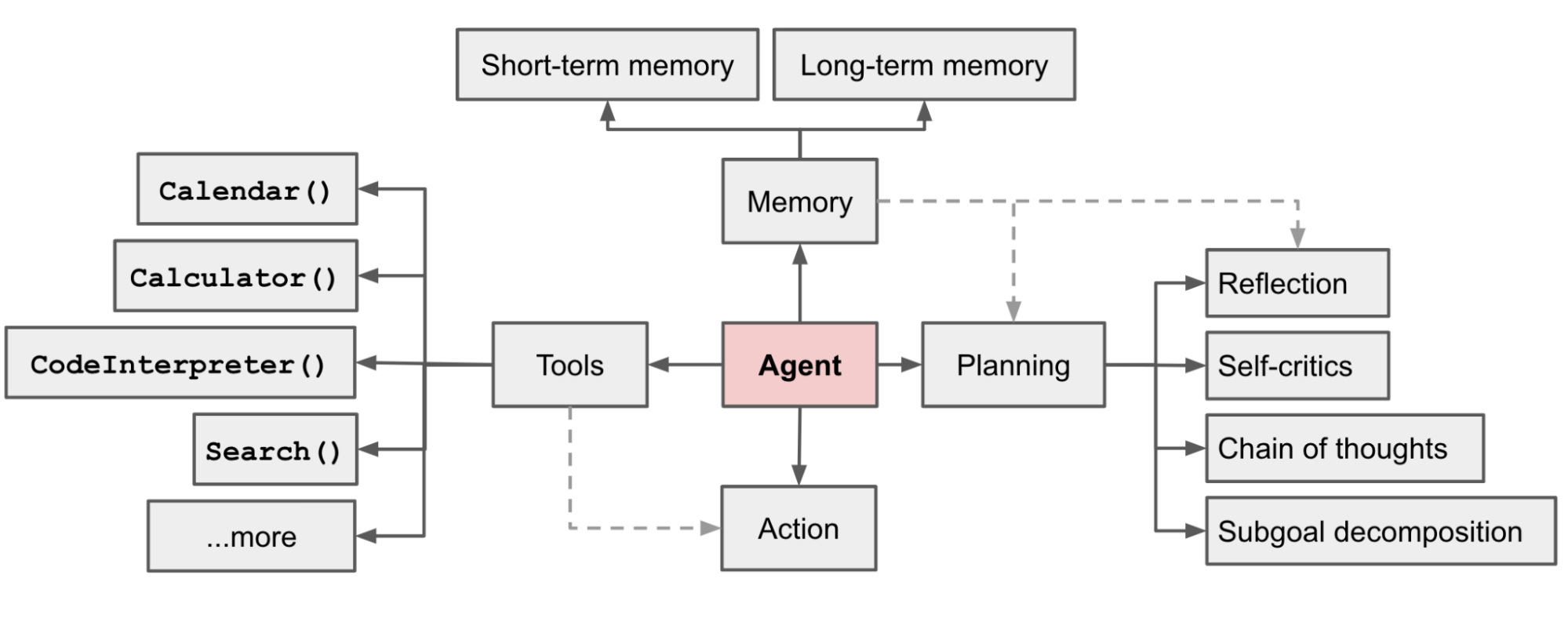

The term “agent” has been overused and misused, just like “AGI”. I would rather follow Lilian Weng’s definition [1]. She framed the agent as an autonomous problem-solver with four major components: memory, planning, tool use, and action.

- Memory is still an active area of research. Most working solutions settle for a simpler approach: filtering, summarizing, or concatenating the previous context. I am very much interested in a more generic, elegant solution, but I do not have one to offer here.

- Planning seems to be a more settled topic, as reasoning and long context windows enable an LLM to craft a list of executable steps.

- Tools let the agent call on external resources to perform actions, and they have been popularized by MCP (the Model Context Protocol).

- Action used to be troublesome but has improved considerably with reasoning and stronger models.
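To make the simple memory strategies above concrete, here is a minimal Python sketch of filtering, summarizing, and concatenating previous context, plus a hybrid that summarizes older turns and keeps recent ones verbatim. The `Turn` type and all function names are hypothetical, and `summarize` is a truncation placeholder; a real agent would ask a language model for the summary.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    role: str     # e.g. "user", "assistant", or "tool" (hypothetical roles)
    content: str


def concatenate(history: list[Turn]) -> str:
    """Concatenate: keep every previous turn verbatim."""
    return "\n".join(f"{t.role}: {t.content}" for t in history)


def filter_recent(history: list[Turn], keep_last: int = 4) -> list[Turn]:
    """Filter: keep only the most recent turns and drop older ones."""
    return history[-keep_last:] if keep_last > 0 else []


def summarize(history: list[Turn], max_chars: int = 40) -> str:
    """Summarize: placeholder that truncates each turn to a short gist.
    A real agent would call a language model here instead."""
    return " | ".join(t.content[:max_chars] for t in history)


def build_context(history: list[Turn], keep_last: int = 2) -> str:
    """Hybrid: summarize older turns, concatenate the most recent ones."""
    split = max(len(history) - keep_last, 0)
    older, recent = history[:split], history[split:]
    parts = []
    if older:
        parts.append("summary: " + summarize(older))
    if recent:
        parts.append(concatenate(recent))
    return "\n".join(parts)


if __name__ == "__main__":
    history = [
        Turn("user", "Chest X-ray, 65-year-old with a persistent cough."),
        Turn("tool", "nodule_detector: one nodule, right upper lobe, 8 mm"),
        Turn("assistant", "Plan: compare against the prior study."),
        Turn("tool", "prior study: nodule, right upper lobe, 6 mm"),
    ]
    print(build_context(history, keep_last=2))
```

The hybrid trades detail in older turns for a bounded context size, which is roughly why most working systems end up with some mix of these three strategies.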

How does it work?

The diagnostic agent combines vision models and language models in a structured workflow:

- Vision tools extract specific findings from medical images

- A language model plans the diagnostic reasoning steps

- Memory maintains context across a multi-step analysis

- Actions execute diagnostic protocols and follow-ups
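The workflow above can be sketched as a small loop. This is a minimal Python sketch under loud assumptions: `nodule_detector` and `effusion_classifier` are hypothetical stand-ins for trained vision models that return canned findings, `plan` is a rule-based placeholder for the LLM planner, and the 6 mm rule in `act` is purely illustrative, not clinical guidance.

```python
from typing import Callable


# Hypothetical vision tools: stand-ins for trained CV models.
# Each takes an image identifier and returns a dict of findings (canned here).
def nodule_detector(image_id: str) -> dict:
    return {"finding": "nodule", "location": "right upper lobe", "size_mm": 8}


def effusion_classifier(image_id: str) -> dict:
    return {"finding": "pleural effusion", "present": False}


TOOLS: dict[str, Callable[[str], dict]] = {
    "nodule_detector": nodule_detector,
    "effusion_classifier": effusion_classifier,
}


def plan(question: str, memory: list[dict]) -> list[str]:
    """Placeholder for the LLM planner: list the tools not yet run.
    A real planner would prompt a language model with the question and memory."""
    done = {entry["tool"] for entry in memory}
    return [name for name in TOOLS if name not in done]


def act(memory: list[dict]) -> str:
    """Placeholder action step: map accumulated findings to a follow-up.
    The 6 mm threshold is illustrative only, not clinical guidance."""
    for entry in memory:
        result = entry["result"]
        if result.get("finding") == "nodule" and result.get("size_mm", 0) >= 6:
            return "recommend follow-up CT"
    return "no follow-up"


def run_agent(question: str, image_id: str, max_steps: int = 5) -> tuple[list[dict], str]:
    memory: list[dict] = []                # context carried across steps
    for _ in range(max_steps):
        steps = plan(question, memory)     # planning
        if not steps:
            break
        name = steps[0]
        result = TOOLS[name](image_id)     # tool use
        memory.append({"tool": name, "result": result})  # memory update
    return memory, act(memory)             # action


if __name__ == "__main__":
    memory, action = run_agent("Any abnormal findings?", "study-001")
    for entry in memory:
        print(entry["tool"], entry["result"])
    print(action)
```

Keeping the planner, tools, and memory as separate pieces is the point of the sketch: swapping the rule-based `plan` for an actual LLM call, or registering another vision tool, leaves the loop unchanged.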

Paradigm Comparison

| Paradigm | Medical Relevance | Data Format | Annotation Challenge | Advantage | Disadvantage |
|---|---|---|---|---|---|
| Vision-Only Model | Medical findings | Raw image | Labeling quality | Works well and runs fast | Hard to transfer and scale |
| MLLM | Medical findings and impressions | Image and report pairs | Curating/synthesizing reports | Scales well across tasks and domains | Not very robust; reported to hallucinate and to be short-sighted |
| Agent | Medical findings, impressions, and diagnosis | Image, CoT/RL with diagnosis | Cold start with CoT synthesized from reports, then self-adapted RL | Scales well across tasks and domains | Complex to implement and validate |

Benefits

The agent approach combines the best of both worlds:

- To show: How and what

- To tell: context and system prompts can change the agent’s behavior without retraining the underlying models (see https://www.dbreunig.com/2025/05/07/claude-s-system-prompt-chatbots-are-more-than-just-models.html).
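A minimal Python sketch of the “tell” side, assuming the common role/content chat-message format: the vision findings stay the same, and only the system prompt changes what the model is asked to do. `build_messages` and both prompts are hypothetical examples, and no specific model API is called here.

```python
def build_messages(system_prompt: str, findings: list[str], question: str) -> list[dict]:
    """Assemble a chat request in the common role/content format.
    The system prompt tells the model how to behave; the findings are context."""
    context = "\n".join(f"- {f}" for f in findings)
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": f"Findings from vision tools:\n{context}\n\nQuestion: {question}"},
    ]


# Two hypothetical system prompts: same findings, different behavior requested.
SCREENING = "You are a screening assistant. Flag anything that needs follow-up."
REPORTING = "You are a reporting assistant. Write a concise impression section."

if __name__ == "__main__":
    findings = ["nodule, right upper lobe, 8 mm", "no pleural effusion"]
    for prompt in (SCREENING, REPORTING):
        messages = build_messages(prompt, findings, "What is your assessment?")
        print(messages)
```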

Watch-Outs

This approach assumes that “knowledge” and “skills” are composable and complement each other, an assumption that requires careful validation in clinical settings.

Final Thoughts

By this point, I hope you understand what I am trying to build:

- Vision models alone are limited: Data and annotation constraints force narrow specialization

- Language models provide reasoning: They excel at planning, memory, and action coordination

- CV models become tools: Repositioned as specialized instruments within a broader system

The beauty of this approach:

- Extensible: More modalities = more tools

- Transferable: Findings can be cross-checked across regions, modalities, and follow-up studies

- Scalable: Potential to move from diagnosis to prognosis
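As an illustration of the cross-check idea, here is a minimal Python sketch that compares findings from a prior study against a follow-up; the same function could compare two modalities. The `Finding` fields, matching on (label, region), and the 2 mm size tolerance are assumptions chosen for the example, not a validated protocol.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Finding:
    source: str                     # e.g. "CXR prior" or "CT follow-up" (hypothetical labels)
    label: str                      # e.g. "nodule"
    region: str                     # e.g. "RUL" (right upper lobe)
    size_mm: Optional[float] = None


def cross_check(a: list[Finding], b: list[Finding], tol_mm: float = 2.0) -> list[str]:
    """Compare two sets of findings matched on (label, region).
    Reports findings present in only one set and size changes beyond tol_mm."""
    b_by_key = {(f.label, f.region): f for f in b}
    a_keys = {(f.label, f.region) for f in a}
    notes = []
    for f in a:
        match = b_by_key.get((f.label, f.region))
        if match is None:
            notes.append(f"{f.label} in {f.region}: seen in {f.source} only")
        elif (f.size_mm is not None and match.size_mm is not None
              and abs(match.size_mm - f.size_mm) > tol_mm):
            notes.append(f"{f.label} in {f.region}: size changed {f.size_mm} -> {match.size_mm} mm")
        else:
            notes.append(f"{f.label} in {f.region}: consistent")
    # Findings that appear only in the second set.
    for key, g in b_by_key.items():
        if key not in a_keys:
            notes.append(f"{g.label} in {g.region}: seen in {g.source} only")
    return notes


if __name__ == "__main__":
    prior = [Finding("CXR prior", "nodule", "RUL", 6.0)]
    follow_up = [
        Finding("CT follow-up", "nodule", "RUL", 9.0),
        Finding("CT follow-up", "effusion", "left base"),
    ]
    for note in cross_check(prior, follow_up):
        print(note)
```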

To excel at doing ONE task, we may have to do well in ALL tasks.